I had some spare parts and wanted to set up a Proxmox Backup Server for my 3-node ceph-enabled cluster. It didn’t go strictly as planned, but I found a way to ditch GPUs on my nodes, which then turned out to be too good to be true. Now, here I am with extra NICs and fancy names returned by ip addr.

Lemons -> Lemonade

WoL, How?

As of now, my cluster is tethered only to a power outlet, with a wireless network uplink. The cluster’s router is a Teltonika RUT240, which supports ZeroTier through a plug-in. Being able to turn machines on over an overlay network is a plus for me. I don’t use it across the globe, but this keeps things simple and neatly isolated from smart home appliances connected to the main router.

Edit (2024-08-26): Simply appending the WakeOnLan=magic option to the .link files throughout this post is a better approach. I wish I had read the manual thoroughly last time.
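As a sketch of what that edit describes, the enlab .link file used later in this post would gain one extra line in its [Link] section. The MAC address and name are the ones from pve3; that nothing else in the file needs to change is an assumption.

```ini
# /etc/systemd/network/10-enlab.link (sketch with the WakeOnLan= line added)
[Match]
PermanentMACAddress=24:4b:fe:df:bb:fe

[Link]
Name=enlab
# Enable wake-up on magic packet for this link.
WakeOnLan=magic
```

With this in place, the separate WoL service described next should presumably no longer be needed for that link.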

I had already set up a WoL service thanks to Martin’s SO answer; my addendum is as follows:

/etc/systemd/system/wol@.service:

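A minimal sketch of such a template unit, not necessarily identical to the one from the answer. The ethtool path (/usr/sbin/ethtool) and the ordering after network.target are assumptions; %i expands to the instance name, that is, the link name given after the @.

```ini
# /etc/systemd/system/wol@.service (sketch)
[Unit]
Description=Enable Wake-on-LAN on %i
After=network.target

[Service]
Type=oneshot
# "wol g" tells the NIC to wake on a magic packet.
ExecStart=/usr/sbin/ethtool -s %i wol g

[Install]
WantedBy=multi-user.target
```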

This way, one or more network links can be enabled for WoL.

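Enabling the template unit for one or several links could be sketched as a small loop. The helper name enable_wol is made up for illustration, and the link names in the usage lines are only examples.

```shell
# Hypothetical helper: enable and immediately start wol@<link>.service
# for every link name passed as an argument. Run as root.
enable_wol() {
    for link in "$@"; do
        systemctl enable --now "wol@${link}.service"
    done
}

# Usage (link names are examples):
#   enable_wol enp6s0
#   enable_wol enp6s0 enp4s0f0
```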

If neither --now is used nor the service is started manually, and the very next power cycle is a shutdown rather than a reboot, WoL will not work, because the WoL setting only survives until the next boot-up. So it must be enabled at least during every boot that will end in a shutdown, or more generally at each boot, hence the service.

What the f…? etherium2, entropy, englishmaninnewyork1?

Torvalds et al. have not added new network device types for Ethereum v2 miners, the inevitable decay of our existence, nor devices belonging to humble subjects of Charles III that entered the city of New York. At least not yet, that is.

Renaming an interface is explained in both the Arch Wiki and the Proxmox docs. The Proxmox naming convention suggests a prefix of either eth or en so that the renamed interfaces show up in the web UI. So the names above are just a play on words for comedic effect.

I settled on enlab for NICs connected to my homelab switch, and encephN for the CEPH network’s NICs, where N is a digit.

This is the pristine result of ip addr after adding the second X540-AT2 card:


The [Match] section is documented on the man7 systemd.link page. I am using PermanentMACAddress to be able to target specific cards.

- /etc/systemd/network/10-enlab.link on pve3:

  ```ini
  [Match]
  PermanentMACAddress=24:4b:fe:df:bb:fe
  [Link]
  Name=enlab
  ```

- /etc/systemd/network/10-enceph0.link on pve3:

  ```ini
  [Match]
  PermanentMACAddress=6c:92:bf:48:89:4a
  [Link]
  Name=enceph0
  ```

- /etc/systemd/network/10-enceph1.link on pve3:

  ```ini
  [Match]
  PermanentMACAddress=6c:92:bf:48:89:4b
  [Link]
  Name=enceph1
  ```

- New /etc/network/interfaces on pve3:

  ```
  auto lo
  iface lo inet loopback

  # previously enp6s0
  # currently enp8s0
  auto enlab
  iface enlab inet manual

  # previously enp4s0f0
  # currently enp6s0f0
  auto enceph0
  iface enceph0 inet6 static
          address fd0c:b219:7c00:2781::3
          netmask 125
          mtu 9000
          post-up /sbin/ip -f inet6 route add fd0c:b219:7c00:2781::1 dev enceph0
          post-down /sbin/ip -f inet6 route del fd0c:b219:7c00:2781::1 dev enceph0

  # previously enp4s0f1
  # currently enp6s0f1
  auto enceph1
  iface enceph1 inet6 static
          address fd0c:b219:7c00:2781::3
          netmask 125
          mtu 9000
          post-up /sbin/ip -f inet6 route add fd0c:b219:7c00:2781::2 dev enceph1
          post-down /sbin/ip -f inet6 route del fd0c:b219:7c00:2781::2 dev enceph1

  auto vmbr0
  iface vmbr0 inet static
          address 192.168.240.13/24
          gateway 192.168.240.1
          bridge-ports enlab
          bridge-stp off
          bridge-fd 0

  source /etc/network/interfaces.d/*
  ```
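The permanent MAC addresses for the [Match] sections can be read with ethtool -P. A small sketch, where the helper name perm_mac is hypothetical and the interface name is whatever ip addr currently shows for the card:

```shell
# Hypothetical helper: print the permanent (burned-in) MAC address of a link.
# `ethtool -P <dev>` prints a line like "Permanent address: 6c:92:bf:48:89:4a".
perm_mac() {
    ethtool -P "$1" | awk '{ print $3 }'
}

# Usage (interface name is an example):
#   perm_mac enp6s0f0
```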

At this point a reboot is necessary; WoL is still going to work, if that matters to anyone.

I tried restarting the systemd-udev-trigger service as per toomas’ answer. Although ip link showed the updated NIC names, vmbr0 was absent. Moreover, restarting the networking service halted the node. For the time being, a reboot is obligatory.

Lastly, after the reboot, I removed the old WoL service and added a new one with the current link name:

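Swapping the service instance from the old link name to the new one could look roughly like this. The helper name switch_wol and the old link name in the usage line are assumptions for illustration.

```shell
# Hypothetical helper: move the WoL service from an old link name to a new one.
# $1 = old link name, $2 = new link name. Run as root.
switch_wol() {
    systemctl disable --now "wol@$1.service"
    systemctl enable --now "wol@$2.service"
}

# Usage (the old name is an example):
#   switch_wol enp8s0 enlab
```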

It worked!

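One way to confirm that the setting took effect is to read the active Wake-on flags back from ethtool. This is only a sketch; the helper name wol_flags is hypothetical.

```shell
# Hypothetical helper: print the active Wake-on-LAN flags of a link.
# ethtool prints both "Supports Wake-on: ..." and "Wake-on: ..."; only the
# latter has "Wake-on:" as its first field.
wol_flags() {
    ethtool "$1" | awk '$1 == "Wake-on:" { print $2 }'
}

# Usage (may need root):
#   wol_flags enlab
# "g" means wake on magic packet, "d" means disabled.
```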

One step closer to homogeneous configuration management. This is the way!

What is that Pseudo-headless part?

Randomly, but not seldom, I got boot loops that seemed to be caused by electrical problems. I am not sure about that, but sometimes it took less than a split second for the computer to power itself off.

However, if the power-on was issued by WoL, it would boot loop indefinitely until, sometimes, it was able to boot up normally. So I had to undo this awesome headless setup.

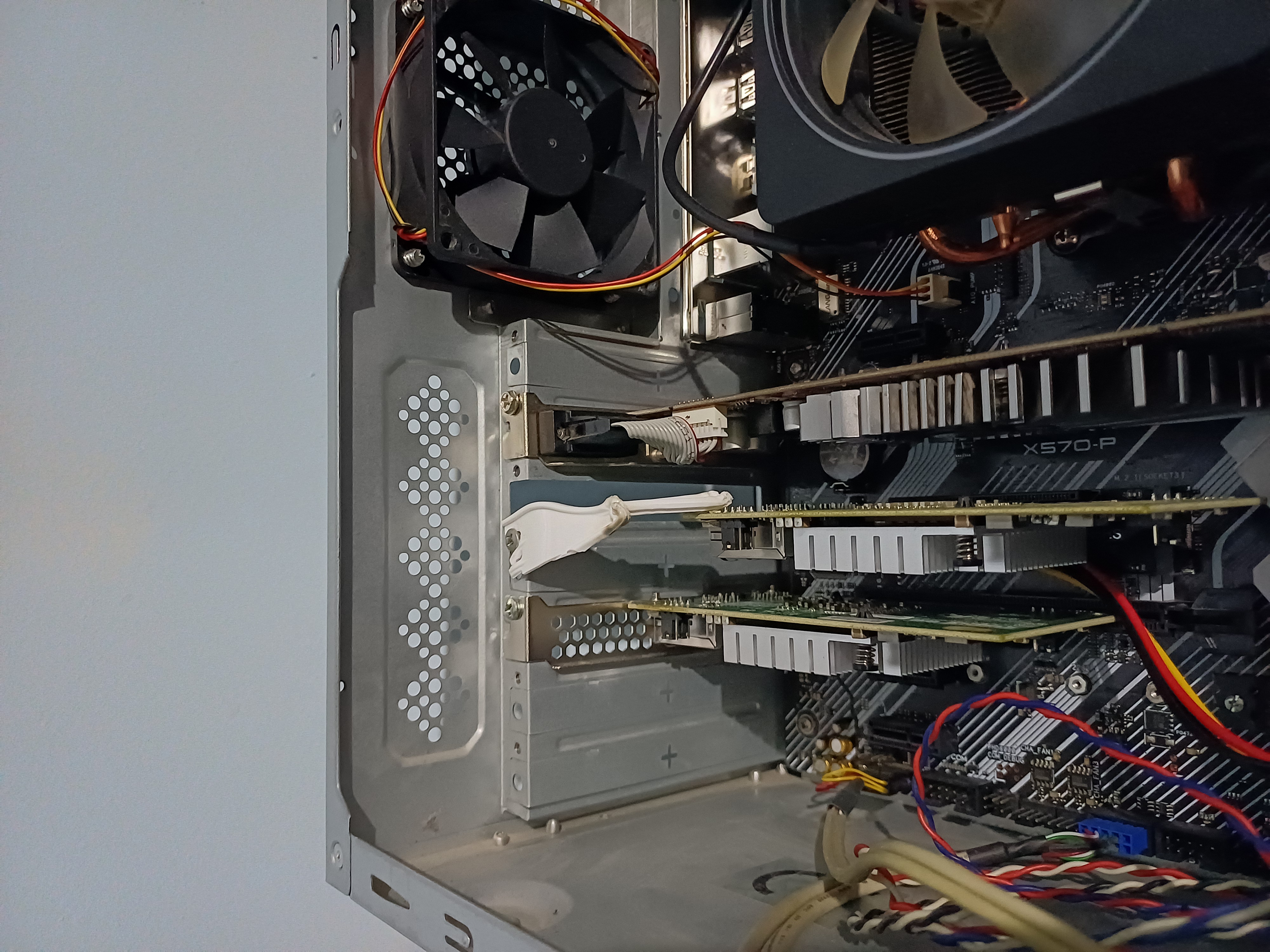

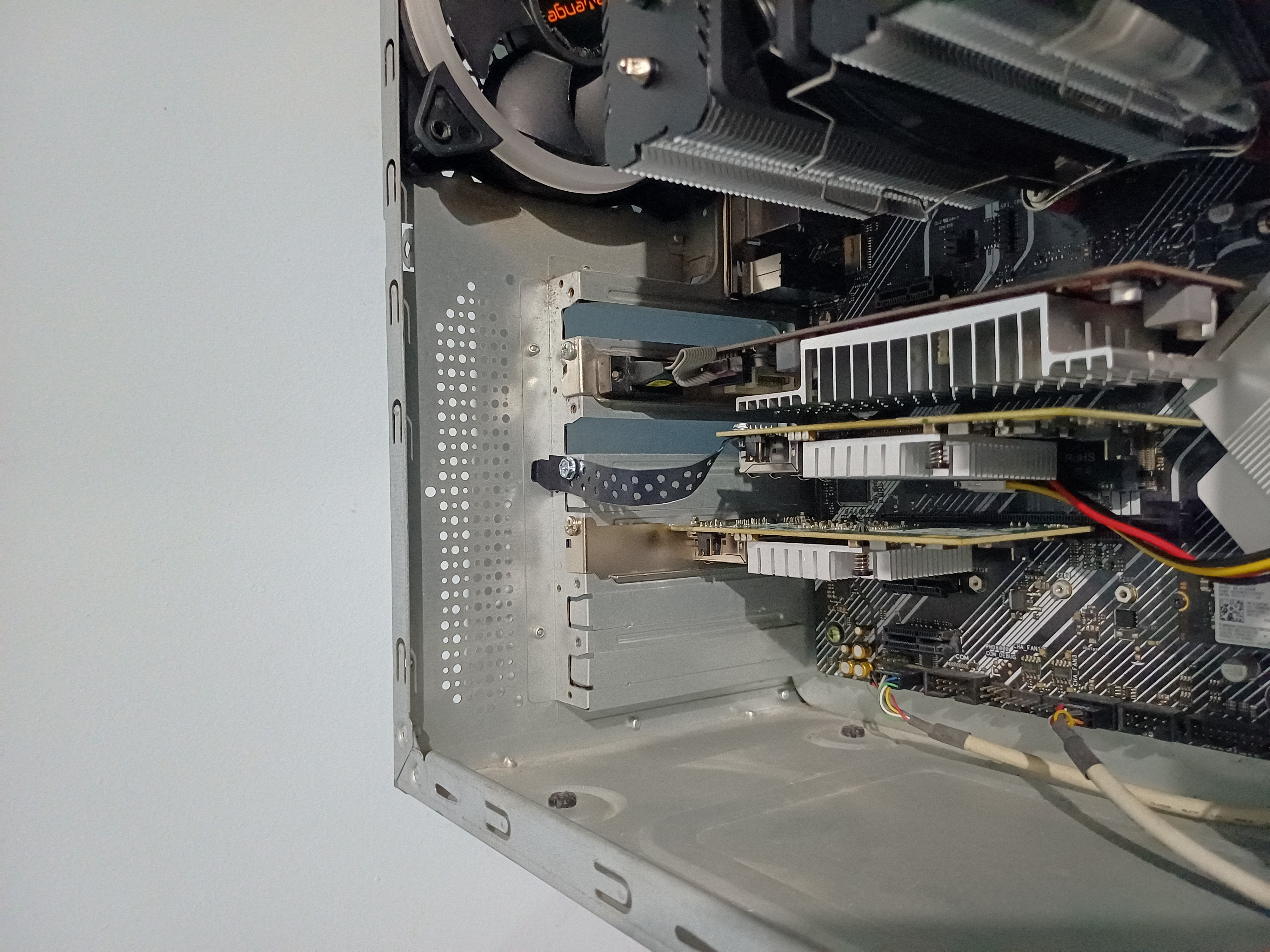

If it weren’t for that unreliability, I would just have removed the GPUs rather than improvising a PCIe card holder from scraps. See the pictures below.

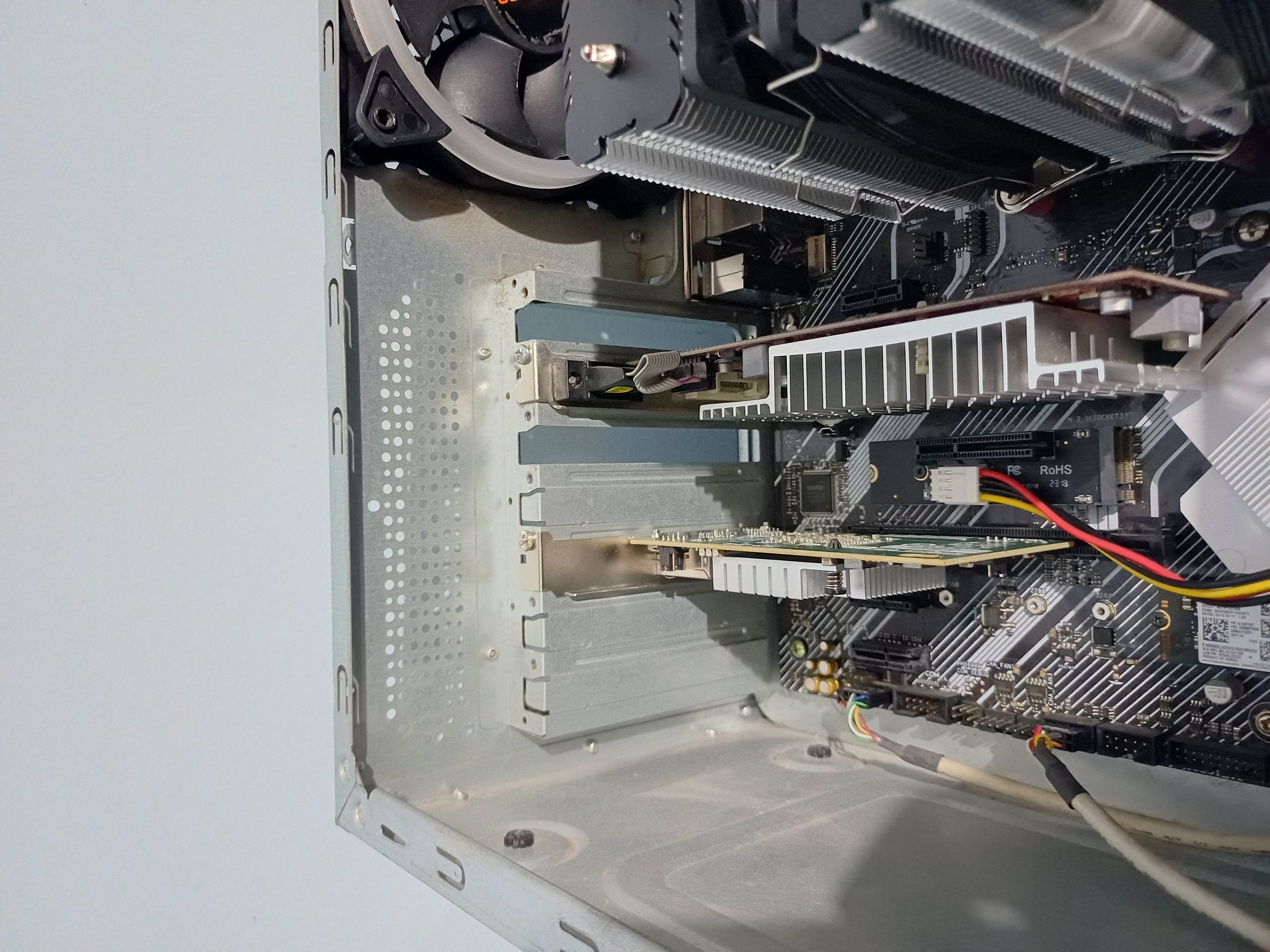

Still, using a 4x NGFF to PCIe adapter from China made the computers boot up consistently. The following check confirms that it is actually electrically 4x and not only physically 4x. The 20 GT/s it provides at PCIe 2.0 (4 lanes at 5 GT/s each) is enough for another X540-AT2 dual 10G card. Interestingly enough, the onboard NIC uses PCIe 1.0 speeds, which makes total sense for right-sizing.

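One common way to run such a check is to compare LnkCap and LnkSta in lspci -vv output; whether this is the exact check used here is an assumption. The sketch also works out the arithmetic: 4 lanes at 5 GT/s give 20 GT/s raw, and since PCIe 2.0 uses 8b/10b encoding, about 16 Gbit/s of that is usable per direction, slightly under what two fully saturated 10G ports would need in one direction. The helper names and the PCI address are hypothetical.

```shell
# Hypothetical helper: show the maximum (LnkCap) and negotiated (LnkSta) PCIe
# link speed and width of one device. $1 is a PCI address such as 06:00.0
# (an example; take the real one from plain `lspci`). Reading capabilities
# needs root.
pcie_link() {
    lspci -vv -s "$1" | grep -E 'LnkCap:|LnkSta:'
}

# Usable Gbit/s per direction of a PCIe 2.0 link with $1 lanes:
# 5 GT/s per lane, of which 8b/10b encoding leaves 8/10 for data.
pcie2_usable_gbit() {
    echo $(( $1 * 5 * 8 / 10 ))
}

# Usage:
#   pcie_link 06:00.0
#   pcie2_usable_gbit 4
```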

At last, I am technically able to expand up to a 5-node cluster, or more realistically a 4-node one. For now, let me keep the device list in /etc/network/interfaces short.

Some photography:

- This Bent Plastic™️, though a possible fire hazard, gets the job done.

- This Bent Metal™️ is rock solid.

- Lastly, here is the adapter itself.

What was the main quest?

I had some DDR2-era machines lying around. I wanted to use one of them as a Proxmox Backup Server for my cluster.

- An ancient Intel(R) Core(TM)2 CPU E7500 POST-ed with a mere 6 GB of RAM. However, I could not use an old spare fanless GPU with dual DVI, and I did not want to use my AMD RX6400 for this.

- Did not have a DE9 serial cable ready to set up with TTY access from the get-go.

- Had a problem with the network not coming up after removing the GPU.

- Thought it was the onboard R8168 (DKMS is the only way to use those cards, in my opinion). The card was not working, but of course it wasn’t the problem.

- Added a spare dual-gigabit 82575EB for stable operation.

- The error was Predictable Network Interface Names altering the interface name after removing the GPU; I did not want to just revert to ethX notation.

It is a shame that I could not get WoL to work on the ancient hardware for PBS.