Another short one.

After sourcing some almost-dead second-hand enterprise SSDs and crimping some short Ethernet cables, I can now migrate VMs and CTs at will, or automatically through an HA policy.

Homelab 2.0 is here.

ASCII diagram:

The diagram does not show the Corosync/internet NIC; it covers only the Ceph-related, physically closed-loop network.


End result:

Proxmox has a Full Mesh - Routed (Simple) example for IPv4; here is my IPv6 version.

The important parts are interfaces enp4s0f0 and enp4s0f1 on the dual-port X540-T2 10G NIC. The configuration is based on Michael Hampton's answer.

Also check out A.B's answer.

root@pve1:~# cat /etc/network/interfaces:


This manual change has to be made on every node, adapted to each node's interfaces. My setup is homogeneous, so cycling the node numbers was enough in my case.
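As an illustration of what "cycling numbers" can look like, here is a small generator that prints the two mesh stanzas for node N, modelled on the IPv4 Routed (Simple) example but with `ip -6` routes. It is a sketch, not the actual file from this setup: the addressing (pveN owns fd0c:b219:7c00:2871::N, which matches pve3 being ::3 in the iperf3 tests), the cabling (enp4s0f0 to the next node, enp4s0f1 to the previous one) and the /64 prefix length on the node address are all assumptions.

```bash
#!/usr/bin/env bash
# Sketch: print the /etc/network/interfaces mesh stanzas for node N of a
# 3-node IPv6 routed full mesh, modelled on Proxmox's IPv4 "Routed (Simple)"
# example. ASSUMPTIONS (hypothetical, not the author's actual file):
#   - pveN owns ${PREFIX}::N
#   - enp4s0f0 is cabled to the next node, enp4s0f1 to the previous one
set -euo pipefail

PREFIX="fd0c:b219:7c00:2871"
NODES=3

# Args: interface name, own node number, peer node number.
mesh_stanza() {
    local ifname=$1 self=$2 peer=$3
    cat <<EOF
auto ${ifname}
iface ${ifname} inet6 static
        address ${PREFIX}::${self}/64
        up ip -6 route add ${PREFIX}::${peer}/128 dev ${ifname}
        down ip -6 route del ${PREFIX}::${peer}/128 dev ${ifname}

EOF
}

# Cycle node numbers: next peer on enp4s0f0, previous peer on enp4s0f1.
mesh_config() {
    local n=$1
    local next=$(( n % NODES + 1 ))
    local prev=$(( (n + NODES - 2) % NODES + 1 ))
    mesh_stanza enp4s0f0 "$n" "$next"
    mesh_stanza enp4s0f1 "$n" "$prev"
}

mesh_config "${1:-1}"
```

Running it with argument 2 (for example `bash mesh-stanzas.sh 2`, file name hypothetical) prints the stanzas for pve2. The more specific /128 routes win over the /64 that both ports carry, which is what pins each peer to its own cable.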

After making the change, apply the configuration with:


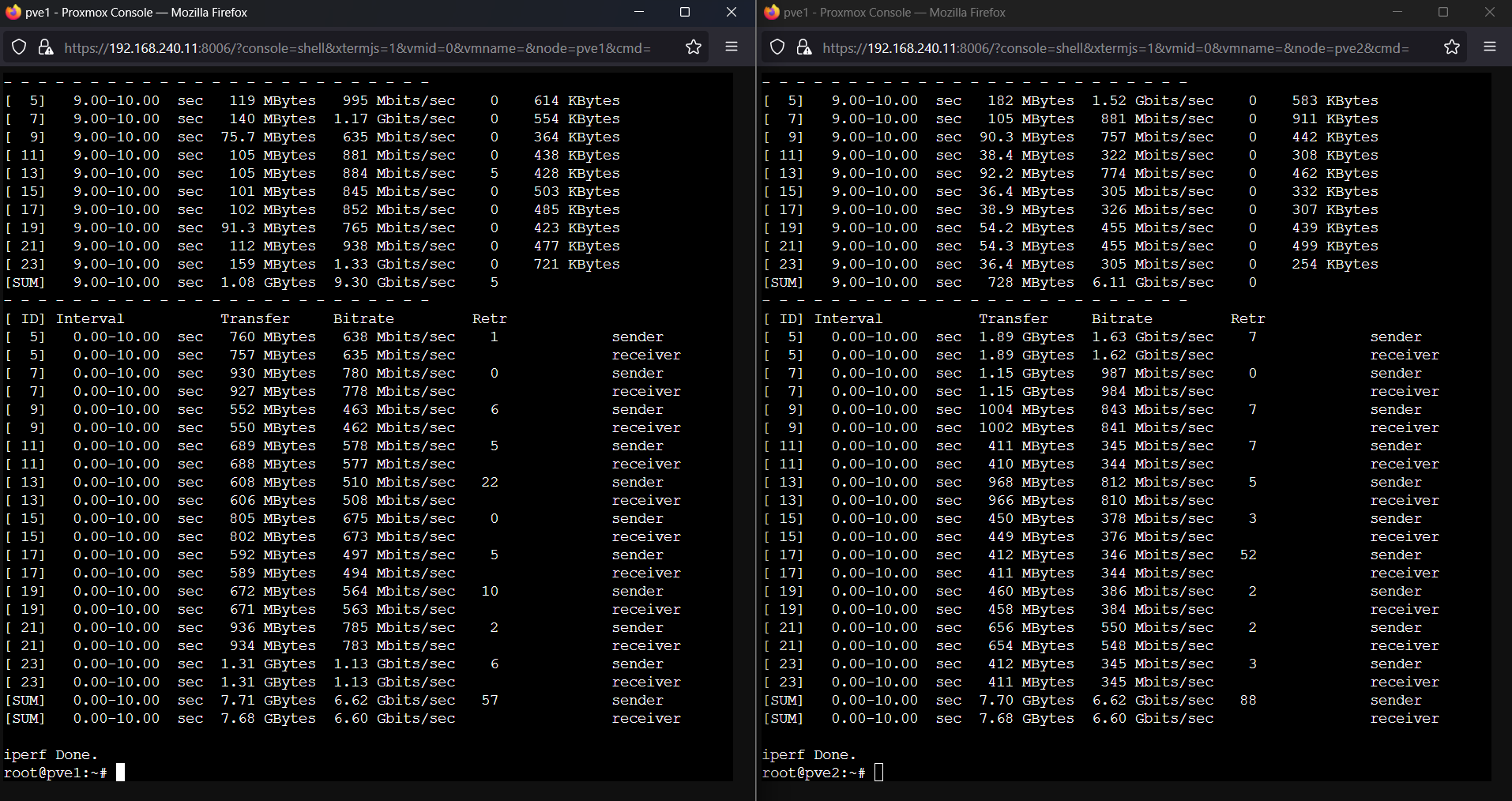
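One way to check the result on each node is to compare the kernel's IPv6 routes with what the mesh expects. After applying the file (on Proxmox VE releases that ship ifupdown2, `ifreload -a` does this), the function below reads `ip -6 route show` output from stdin and reports each expected peer route. This is a sketch under assumed addressing and cabling: pveN owns fd0c:b219:7c00:2871::N, enp4s0f0 goes to the next node and enp4s0f1 to the previous one, three nodes in total.

```bash
#!/usr/bin/env bash
# Sketch: verify that node N has a host route to each mesh peer on the
# expected port, reading `ip -6 route show` output from stdin.
# ASSUMPTIONS (hypothetical): pveN owns ${PREFIX}::N, enp4s0f0 is cabled to
# the next node and enp4s0f1 to the previous one, 3 nodes in total.

PREFIX="fd0c:b219:7c00:2871"
NODES=3

check_routes() {
    local n=$1 routes pair peer dev rc=0
    routes=$(cat)
    local next=$(( n % NODES + 1 ))
    local prev=$(( (n + NODES - 2) % NODES + 1 ))
    for pair in "${next} enp4s0f0" "${prev} enp4s0f1"; do
        read -r peer dev <<<"$pair"
        # iproute2 prints /128 host routes without the prefix length.
        if grep -q "^${PREFIX}::${peer} dev ${dev} " <<<"$routes"; then
            echo "ok:      ${PREFIX}::${peer} via ${dev}"
        else
            echo "MISSING: ${PREFIX}::${peer} via ${dev}"
            rc=1
        fi
    done
    return "$rc"
}
```

For example, on pve1 after sourcing the file: `ip -6 route show | check_routes 1`. A non-zero return status means at least one peer route is missing or sits on the wrong port.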

Tested by:

- root@pve3:~# iperf3 -s --port 2345 # for pve1

- root@pve3:~# iperf3 -s --port 6789 # for pve2

- root@pve1:~# iperf3 -c fd0c:b219:7c00:2871::3 --port 2345 -P 4

- root@pve2:~# iperf3 -c fd0c:b219:7c00:2871::3 --port 6789 -P 4
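The commands above exercise only the links from pve1 and pve2 to pve3. A small generator can print a plan that covers every link in both directions. It keeps the same idea of one server port per client, because an iperf3 server runs only one test at a time. The node names, the ::N addressing and the port range starting at 5201 (iperf3's default port) are assumptions for illustration.

```bash
#!/usr/bin/env bash
# Sketch: print iperf3 commands covering every mesh link in both directions.
# ASSUMPTIONS (hypothetical): node N is pveN at ${PREFIX}::N, and ports
# BASE_PORT+1 .. BASE_PORT+NODES are free on every node.
set -euo pipefail

PREFIX="fd0c:b219:7c00:2871"
NODES=3
BASE_PORT=5200

iperf_plan() {
    local server client port
    for (( server = 1; server <= NODES; server++ )); do
        for (( client = 1; client <= NODES; client++ )); do
            if (( client == server )); then
                continue
            fi
            # An iperf3 server handles one test at a time, so each client
            # gets its own server port, as in the manual test.
            port=$(( BASE_PORT + client ))
            echo "pve${server}: iperf3 -s --port ${port}"
            echo "pve${client}: iperf3 -c ${PREFIX}::${server} --port ${port} -P 4"
        done
    done
}

iperf_plan
```

With three nodes this prints six server/client pairs, including the pve1/pve2 link that the manual test does not touch.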

Previously tried:

I first tried to use only the Proxmox VE web GUI.

- Only Linux Bridge or OVS Bridge worked. Although that should suffice for a 3-node cluster, I wanted an approach capable of 5 or 7 nodes.

- No bonding type other than broadcast worked. I would have loved for XOR Linux bonding to just work, but I could only ping between nodes 1 and 3. The XOR choice of interface apparently did not follow the cyclic cabling, and I had no intention of hunting for a solution, if there is one.
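One plausible reason XOR bonding reached only one peer: according to the Linux kernel bonding documentation, balance-xor with the default layer2 transmit hash picks the outgoing port as (source MAC[5] XOR destination MAC[5] XOR packet type ID) modulo the number of ports. The port therefore depends only on MAC bytes, not on which cable actually leads to which peer. The sketch below is a simplified model of that formula with hypothetical MAC bytes; it ignores details such as neighbour discovery multicast frames.

```bash
#!/usr/bin/env bash
# Sketch: the balance-xor "layer2" hash as documented for the Linux bonding
# driver:  hash = src MAC[5] ^ dst MAC[5] ^ packet type ID
#          slave = hash % slave count
# Simplified model; all MAC bytes used below are hypothetical.
set -euo pipefail

ETH_P_IPV6=$(( 0x86DD ))   # EtherType (packet type ID) of IPv6 frames

# Args: last byte of source MAC, last byte of destination MAC, slave count.
l2_slave() {
    local src=$1 dst=$2 slaves=${3:-2}
    echo $(( (src ^ dst ^ ETH_P_IPV6) % slaves ))
}

# Hypothetical pve1 (MAC ending 0x10) talking to two peers whose MACs end
# in 0x20 and 0x22: both hash to the same port, whatever the cabling is.
for peer in 0x20 0x22; do
    echo "pve1 -> peer ${peer}: slave $(l2_slave 0x10 "$peer")"
done
```

With two ports only the parity of the XOR survives the modulo, so two peers whose MAC addresses end in bytes of equal parity are always sent out of the same port, and one of them sits behind the wrong cable.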

U-L-What?

During this adventure I came across ULAs (Unique Local Addresses) in a blog post, so while at it I chose myself a subnet: fd0c:b219:7c00::/48 [fdXc:bug:kXX::/48 if one squints their eyes hard enough]. Here is the declaration:

I ask the globe to honor my rightful claim to the subnet. Anyone who opposes should apply for a duel of rock-paper-scissors and bring their own hand.
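For anyone claiming their own subnet: an RFC 4193 ULA /48 is fd00::/8 followed by a 40-bit Global ID, which the RFC requires to be generated pseudo-randomly rather than assigned sequentially or from well-known numbers. The sketch below takes 40 bits from /dev/urandom instead of using the RFC's SHA-1 based sample algorithm. It also formats a given Global ID, so feeding it 0cb2197c00 reproduces the prefix above.

```bash
#!/usr/bin/env bash
# Sketch: build an RFC 4193 ULA /48 (fd00::/8 + 40-bit Global ID).
# Uses 40 random bits from /dev/urandom rather than the SHA-1 based
# sample algorithm suggested in RFC 4193 section 3.2.2.
set -euo pipefail

# Format a 10-hex-digit Global ID as fdGG:GGGG:GGGG::/48.
ula_prefix_from_gid() {
    local gid=$1
    # printf %x drops leading zeros inside each 16-bit group.
    printf 'fd%s:%x:%x::/48\n' "${gid:0:2}" "0x${gid:2:4}" "0x${gid:6:4}"
}

# Draw a fresh random Global ID: 5 bytes = 40 bits, as lowercase hex.
random_ula_prefix() {
    local gid
    gid=$(od -An -N5 -tx1 /dev/urandom | tr -d ' \n')
    ula_prefix_from_gid "$gid"
}

random_ula_prefix
```

Appending a 16-bit subnet ID such as 2871 then gives a /64 like fd0c:b219:7c00:2871::/64, the subnet used for the mesh.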

Now what?

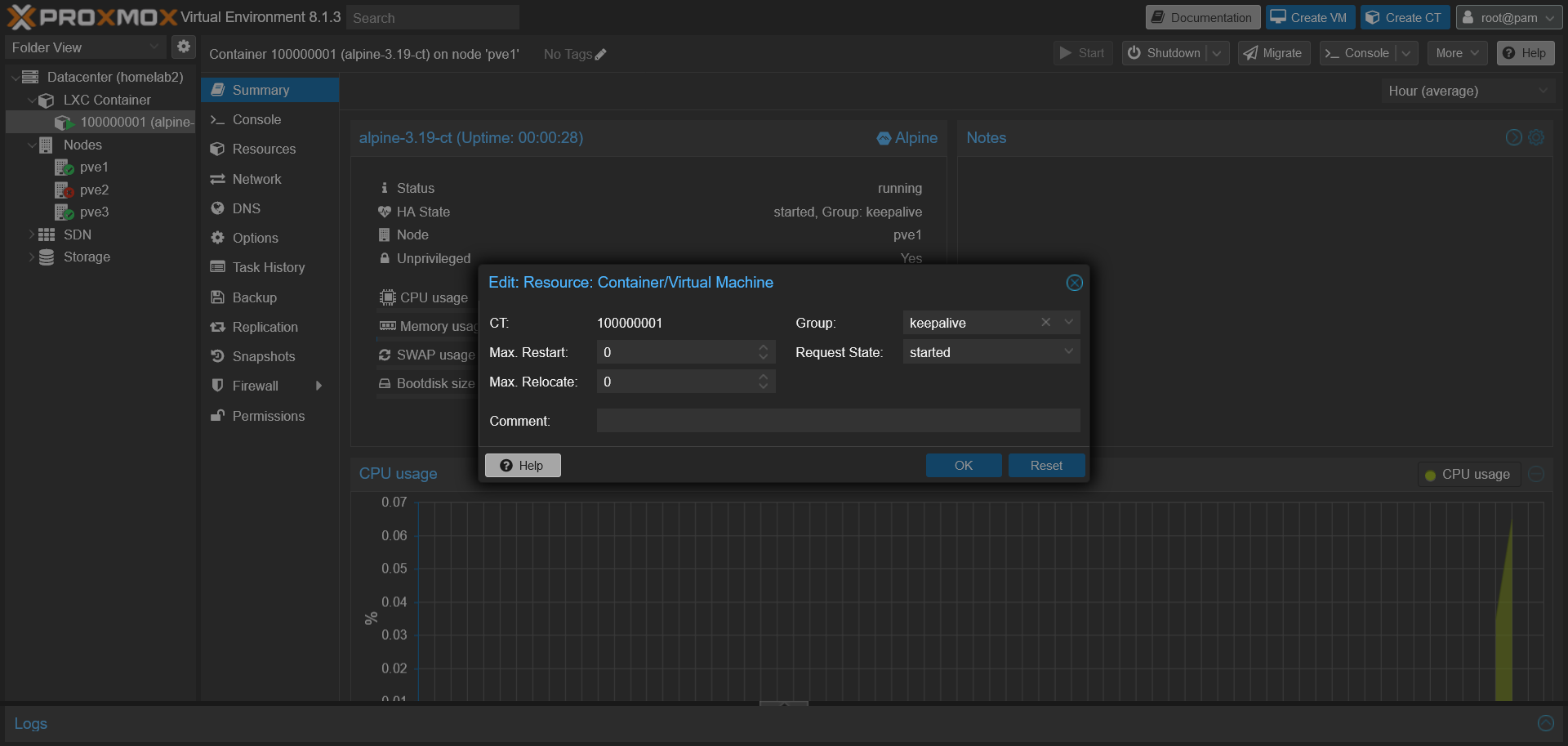

At this point, creating a Ceph pool for VMs/CTs is trivial. Setting an HA policy and tweaking the retention time etc. then provides resiliency through redundancy. Here is an LXC container automatically migrated from pve2 to pve1 after a shutdown.

Bibliography

- Proxmox Full Mesh - Routed (Simple)

- Michael Hampton’s SuperUser answer for IPv6

- Rosta Kosta’s StackExchange answer for IPv4

- A.B’s comprehensive ServerFault answer, a must read

- Blog post about ULA addresses and how they compare to private IPv4

- My embarrassing question on isolcpus alternative